Dec 16th 2025

Mark Sujew, Dr. Miro Spönemann

Xtext, Langium, what next?

What we’ve learned from building large-scale language tooling with Langium and Xtext, and a first glimpse at a new high-performance language engineering toolkit.

Updated June 13th, 2025 with new announcement information & development updates for June.

We see huge potential in the combination of domain-specific languages (DSLs) and AI technologies such as large language models (LLMs). AI-based assistants can support users by suggesting completions in a DSL editor, answering questions in a chat, or generating DSL text according to user requirements. What’s more, DSLs can help bring clarity, predictability and conciseness to applications that already involve LLMs.

Today, we’d like to introduce Langium AI: a toolbox for applications leveraging both LLMs and DSLs. Langium AI simplifies building AI applications with Langium DSLs. It lowers the barrier to entry by making it straightforward to measure the quality & performance of LLMs with regard to your DSL. This, in turn, helps your AI projects succeed with less effort & risk by leveraging the best parts of Langium. It also increases the value of any Langium DSL without requiring you to change anything about your existing language.

We’re also proud to announce that Langium AI is part of Eclipse Langium, an Eclipse Foundation project. Eclipse Langium is a highly popular framework for building DSLs for modern IDEs and web applications. From the start, we wanted Langium AI to complement Langium itself, not just in name, but by extending Langium’s success into the AI space. We believe that making Langium AI a part of Eclipse Langium is a great way to ensure this success and to reiterate our commitment to the project as a whole. As a result, Langium AI is governed by the Eclipse Foundation, with an engaged & open community of maintainers from various organizations. This was a natural choice, and we’re looking forward to the benefits it will bring to the future of Langium AI.

This announcement explores our vision for Langium AI: some background & context, the kinds of challenges we want to solve, the tools we’ve built to achieve this vision, and a look at what’s next for Langium AI.

With the rise of AI tools like GitHub Copilot, it has become an expectation — not a luxury — that LLMs can work with code. That applies just as much to DSLs as it does to general-purpose languages. Users want to write DSL code faster, get helpful completions, ask questions in natural language, and even generate entire programs based on high-level instructions. But there’s a challenge: general-purpose LLMs aren’t trained on your DSL. Most DSLs live in specialized domains with few publicly available examples, meaning LLMs have little or no understanding of their syntax or semantics. This leads to a knowledge gap where models generate plausible-looking text that isn’t valid or meaningful in the context of your language.

At the heart of this problem is the missing connection between an LLM and the domain-specific knowledge embedded in your DSL. That’s where Langium AI comes in. Our goal is to support grounding LLMs in domain-specific knowledge, enabling AI systems to reason more accurately within the boundaries of your DSL and its domain. This grounding is essential if you want to use LLMs to implement features like editor completions, chat-based assistance, or DSL code generation. But it’s not trivial: LLMs generate text token by token based on prior context, without any built-in awareness of whether their output is syntactically correct or semantically valid. Langium AI addresses this by providing tools that adapt the prediction process to the structure and rules of your DSL.

Langium AI makes the syntactic and semantic details of your DSL consumable from an external context. Instead of reinventing the wheel for each AI application, Langium AI exposes Langium’s existing set of services as building blocks for evaluation and integration. This helps you build AI systems that understand your DSL rather than just guess at it.

And as your DSL evolves, your AI tooling can evolve with it. By keeping Langium AI as a thin abstraction layer on top of your existing services, changes to the language flow naturally into your AI workflows. This allows DSL engineers and AI engineers to work more closely in parallel, without friction.

We’ve chosen to keep the framework lightweight and composable so that it fits into any AI stack rather than replacing it. The technology in this space moves fast, and Langium AI is built to support that: it feeds structured, robust data into whatever tools you choose to use, today or down the road.

To solve these problems, we’ve produced a collection of tools with specific applications in mind. This allows us to keep the framework light while leveraging the power of Langium’s existing service set (parser, validations, etc.) to provide tooling for testing, evaluating, and building AI applications. It also makes it easy for us to extend the framework going forward, either by developing workflows around existing tools or by devising new tools where a need arises.

Currently, our efforts have been focused on these three core areas: Evaluation, Splitting and Constraint.

Evaluation provides a reliable way to assess DSL output from some system (an AI assistant, a standalone model, etc.) and to derive quantitative information about that output. This ranges from raw diagnostics, such as parser errors and the errors and warnings produced by Langium’s own validations, to more complex metrics based on the structure of the DSL itself.
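To make the idea of turning diagnostics into numbers a bit more concrete, here is a minimal sketch that summarizes a list of diagnostics and derives a simple score. It is not the langium-ai-tools API: the `Diagnostic` type is a simplified stand-in for the LSP diagnostic shape that Langium documents carry, and the scoring rule (any error scores 0, each warning costs 0.1) is a hypothetical example.

```typescript
// Severity values follow the Language Server Protocol convention:
// 1 = Error, 2 = Warning, 3 = Information, 4 = Hint.
type Severity = 1 | 2 | 3 | 4;

// Simplified stand-in for an LSP diagnostic (hypothetical, for illustration).
interface Diagnostic {
  severity: Severity;
  message: string;
}

// Hypothetical summary shape; not part of langium-ai-tools.
interface DiagnosticSummary {
  errors: number;
  warnings: number;
  infos: number;
  hints: number;
}

// Counts diagnostics per severity level.
function summarizeDiagnostics(diagnostics: Diagnostic[]): DiagnosticSummary {
  const summary: DiagnosticSummary = { errors: 0, warnings: 0, infos: 0, hints: 0 };
  for (const d of diagnostics) {
    switch (d.severity) {
      case 1: summary.errors++; break;
      case 2: summary.warnings++; break;
      case 3: summary.infos++; break;
      case 4: summary.hints++; break;
    }
  }
  return summary;
}

// Hypothetical scoring rule: any error yields 0; otherwise each warning
// costs 0.1, floored at 0. Integer arithmetic avoids float drift.
function diagnosticScore(summary: DiagnosticSummary): number {
  if (summary.errors > 0) return 0;
  return Math.max(0, 10 - summary.warnings) / 10;
}
```

In a real setup, the diagnostics would come from parsing and validating the generated program with your language’s Langium services rather than being written by hand.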

Splitting is focused on providing document processing utilities that respect the syntactic boundaries of DSL programs. This is useful for data-processing applications that need to catalogue or ingest a large number of DSL programs, such as search and database applications, including retrieval-augmented generation (RAG).

Constraint is focused on restricting the tokens an LLM can output. By deriving BNF-style grammars from a Langium grammar, we can automatically generate constraints on the output tokens of an LLM that directly correspond to the valid syntax of the DSL.
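To illustrate what such a constraint does at decoding time, here is a minimal sketch of grammar-constrained token masking. It assumes a tiny hand-written grammar, `Greeting ::= "Hello " Name "!"` with `Name ::= [A-Z][a-z]*`, checked by hypothetical helper functions; a BNF grammar derived from a Langium grammar would instead be compiled by the decoding engine into an equivalent but far more general check. At each step, any vocabulary token that cannot extend the current text into a valid prefix gets its logit set to negative infinity, so only syntactically valid continuations can be sampled.

```typescript
// Hypothetical grammar, hand-coded for illustration:
//   Greeting ::= "Hello " Name "!"
//   Name     ::= [A-Z][a-z]*
const KEYWORD = "Hello ";

// Returns true if `text` can still be extended into a complete Greeting.
function isValidPrefix(text: string): boolean {
  if (text.length <= KEYWORD.length) {
    // Still inside the keyword: the text must be a prefix of it.
    return KEYWORD.startsWith(text);
  }
  if (!text.startsWith(KEYWORD)) return false;
  // After the keyword: a capitalized name, optionally followed by the final "!".
  return /^[A-Z][a-z]*!?$/.test(text.slice(KEYWORD.length));
}

// Returns true once the text is a complete Greeting (decoding can stop).
function isComplete(text: string): boolean {
  return text.startsWith(KEYWORD) && /^[A-Z][a-z]*!$/.test(text.slice(KEYWORD.length));
}

// Masks the logits of tokens that would break the grammar.
// Assumes `logits[i]` belongs to `vocab[i]` (same length).
function maskLogits(prefix: string, vocab: string[], logits: number[]): number[] {
  return logits.map((logit, i) => (isValidPrefix(prefix + vocab[i]) ? logit : -Infinity));
}

// Convenience helper: the tokens that remain allowed after `prefix`.
function allowedTokens(prefix: string, vocab: string[]): string[] {
  return vocab.filter((token) => isValidPrefix(prefix + token));
}

// Usage with a toy vocabulary:
const vocab = ["Hello", " ", "Hel", "World", "world", "!", "?"];
const atStart = allowedTokens("", vocab); // ["Hello", "Hel"]
const afterName = allowedTokens("Hello World", vocab); // ["world", "!"]
```

The key design point is that the model itself is untouched: the grammar only filters its choices, which is why the same derived grammar can be reused across different models.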

Together, these features aim to provide a lightweight but powerful initial solution for building AI applications that need to work with, generate, or consume programs written in Langium-based DSLs.

Whatever your model and stack are, Langium AI is designed to feed into them rather than replace them. This was a key decision made after a year of research and prototyping, and we settled on the principle that simpler is better. The technology moves so fast in this area that tooling can easily become obsolete within a month or two, if not weeks. Instead of trying to fight that, we support it. As your upstream tooling & stack change over time, and as the models you choose to run with change, Langium AI continues to provide the same raw data that you need.

Let’s dive a bit deeper into the tools. Under the hood, it’s quite simple. Of the tools we described above, the evaluation & splitting tools are part of the new AI Tools (TypeScript package langium-ai-tools), while the constraint logic is available as part of the Langium Core (langium-cli package). The AI Tools are currently split into a pair of imports, with most of the recent work focused on evaluation.

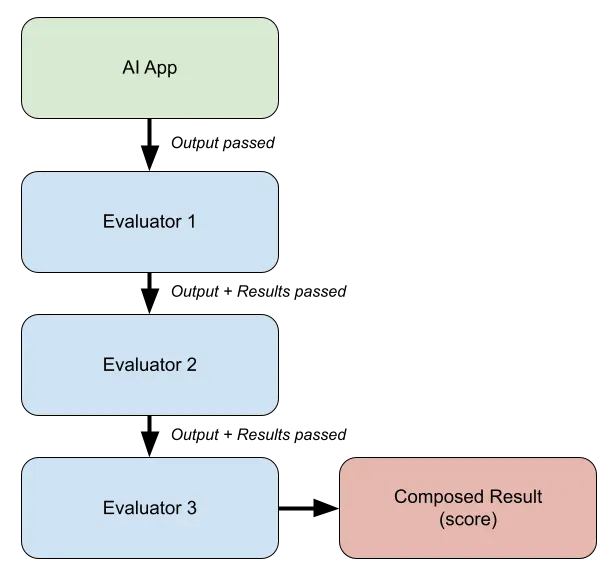

The evaluation process is built from a series of Evaluator objects, which can be composed into a single evaluation pipeline. LLM output goes in (such as a generated DSL program), and evaluation results come out as a score. For composed evaluators, the model output passes through each stage in turn, building up the final evaluation result step by step. This approach lets us write several simple evaluators as strategies and sequence them into more complex evaluation strategies.
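The composition idea can be sketched in a few lines of TypeScript. This is a minimal illustration under our own assumptions, not the langium-ai-tools API: `Evaluator`, `EvalResult` and `compose` are hypothetical names, and the brace check is a crude stand-in for the parser and validation diagnostics a real stage would obtain from your Langium services.

```typescript
// Hypothetical result shape: named metrics accumulated across stages.
interface EvalResult {
  metrics: Record<string, number>;
}

// Hypothetical evaluator: sees the model output plus the result so far,
// and returns an extended result.
interface Evaluator {
  evaluate(output: string, acc: EvalResult): EvalResult;
}

// Composes evaluators into one: the output passes through each stage in order.
function compose(...stages: Evaluator[]): Evaluator {
  return {
    evaluate(output: string, acc: EvalResult): EvalResult {
      return stages.reduce<EvalResult>((result, stage) => stage.evaluate(output, result), acc);
    },
  };
}

// Stage 1: record the output length.
const lengthEvaluator: Evaluator = {
  evaluate: (output, acc) => ({ metrics: { ...acc.metrics, length: output.length } }),
};

// Stage 2: crude stand-in for a parser check; compares brace counts only.
const braceEvaluator: Evaluator = {
  evaluate: (output, acc) => {
    const opens = output.split("{").length - 1;
    const closes = output.split("}").length - 1;
    return { metrics: { ...acc.metrics, unbalancedBraces: Math.abs(opens - closes) } };
  },
};

// Stage 3: derive a single score from the metrics of earlier stages.
const scoreEvaluator: Evaluator = {
  evaluate: (_output, acc) => ({
    metrics: { ...acc.metrics, score: acc.metrics.unbalancedBraces === 0 ? 1 : 0 },
  }),
};

const pipeline = compose(lengthEvaluator, braceEvaluator, scoreEvaluator);
const good = pipeline.evaluate("entity A { }", { metrics: {} });
// good.metrics: { length: 12, unbalancedBraces: 0, score: 1 }
const bad = pipeline.evaluate("entity A {", { metrics: {} });
// bad.metrics.score: 0
```

Because `compose` returns an `Evaluator` itself, a composed pipeline can be nested as a single stage inside a larger one.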

The splitting process takes DSL documents and breaks them apart based on predefined rules. Since we have access to the abstract syntax (as TypeScript types & interfaces), we can define splitting rules that follow the syntactic constructs in your DSL, such as functions, classes, modules or any other construct you want to split on. We also have access to the underlying offsets in the concrete syntax tree, which map each node back to the original program text. From there, we can split programs in whatever way makes sense for our language. For example, we can detect comment blocks (such as function headers) and join them with their functions as a logical unit. This has obvious applications for RAG, but it is also useful for indexing & searching across large code bases, and for citations in chat.
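The comment-joining rule can be sketched as follows. We assume each top-level node is given as a hypothetical `NodeRange` with start and end offsets into the document text (in Langium, an AST node’s `$cstNode` exposes such offsets); the function is our own illustration rather than the langium-ai-tools splitter API. Each chunk is extended backwards over whitespace to include a directly preceding `/* ... */` block comment, but never into the previous node.

```typescript
// Hypothetical input: a named top-level node with offsets into the text.
interface NodeRange {
  name: string;
  offset: number; // inclusive start offset
  end: number; // exclusive end offset
}

interface Chunk {
  name: string;
  offset: number;
  text: string;
}

// Splits `text` into one chunk per node (nodes sorted by offset), attaching a
// block comment that directly precedes a node, separated only by whitespace.
// Simplification: assumes "/*" never appears inside strings between nodes.
function splitWithLeadingComments(text: string, nodes: NodeRange[]): Chunk[] {
  return nodes.map((node, index) => {
    const previousEnd = nodes[index - 1]?.end ?? 0;
    let start = node.offset;
    // Walk back over whitespace, but not past the previous node.
    let i = node.offset;
    while (i > previousEnd && /\s/.test(text.charAt(i - 1))) i--;
    // If a block comment ends right there, start the chunk at its opening "/*".
    if (text.slice(previousEnd, i).endsWith("*/")) {
      const commentStart = text.lastIndexOf("/*", i - 2);
      if (commentStart >= previousEnd) start = commentStart;
    }
    return { name: node.name, offset: start, text: text.slice(start, node.end) };
  });
}

// Usage with a toy program; offsets are computed here instead of parsed.
const source = "/** Adds two numbers */\nfunction add(a, b) { a + b }\n\nfunction noDoc() { 0 }\n";
const chunks = splitWithLeadingComments(source, [
  { name: "add", offset: source.indexOf("function add"), end: source.indexOf("}") + 1 },
  { name: "noDoc", offset: source.indexOf("function noDoc"), end: source.lastIndexOf("}") + 1 },
]);
// chunks[0].text: "/** Adds two numbers */\nfunction add(a, b) { a + b }"
// chunks[1].text: "function noDoc() { 0 }"
```

Keeping the chunk offsets alongside the text is what makes citations possible later: a retrieved chunk can always be traced back to its exact location in the source document.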

As of June, we’ve published our 0.0.2 release on npm as langium-ai-tools, which is designed to work with Langium 3.4.x languages. We’ve also updated our examples with more detailed information, added a program map export feature for workspaces (akin to repo maps), and are actively working on a set of guides for applying Langium AI in practice. We’ll follow up with a more detailed blog post on practical applications, so stay tuned.

We’re continuing to enhance the existing splitter & evaluator APIs, and we’re adding support for exporting grammar-related details (such as meta-model constructs) to support more complex applications. Much of this work has also proven helpful for improving Python-based workflows for fine-tuning & validation, since a good chunk of the existing tooling for ML and AI lives in that ecosystem.

We’re still pretty early in the process, but we’re already very happy with the results we’ve gotten both internally and in practice on actual applications. If you’re working on a Langium DSL, and need to build an AI app for it, then Langium AI should definitely be on your radar.

Ben is an experienced engineer with a background in programming language theory & full-stack development. He previously co-founded his own software company, and has extensive experience leading projects. He's passionate about facilitating team success & solving complex problems. In his spare time, you can find him working on DIY projects and electronics, or gardening.

Daniel co-leads TypeFox, bringing a strong background in software engineering and architecture. His guiding principle is: Customer needs drive innovation, while innovation elevates customer experiences.

Miro joined TypeFox as a software engineer right after the company was established. Five years later, he stepped up as a co-leader and is now eager to shape the future direction and strategy of the company. Miro earned a PhD (Dr.-Ing.) at the University of Kiel and constantly pursues innovation in engineering tools.